The AI visibility sector is evolving quickly, driven by rapid advances in large language model (LLM) capabilities and a growing need for performance optimization. As organizations integrate AI into their business intelligence and operations stacks, LLM optimization has become a deciding factor not only for speed and cost but also for interpretability and compliance. With 2026 on the horizon, developers, enterprises, and other stakeholders are watching new optimization trends to stay competitive and agile.

TL;DR

As AI visibility becomes a key enterprise focus, new approaches to LLM optimization are reshaping how models are trained, deployed, and monitored. The main trends are ultra-efficient quantization, explainable optimization pipelines, synthetic-data fine-tuning, edge-aware deployment, closed-loop feedback systems, modular API-mesh optimizers, and persona-aware tuning. Together, they promise better explainability, lower computational load, and a closer fit between LLMs and business needs in 2026.

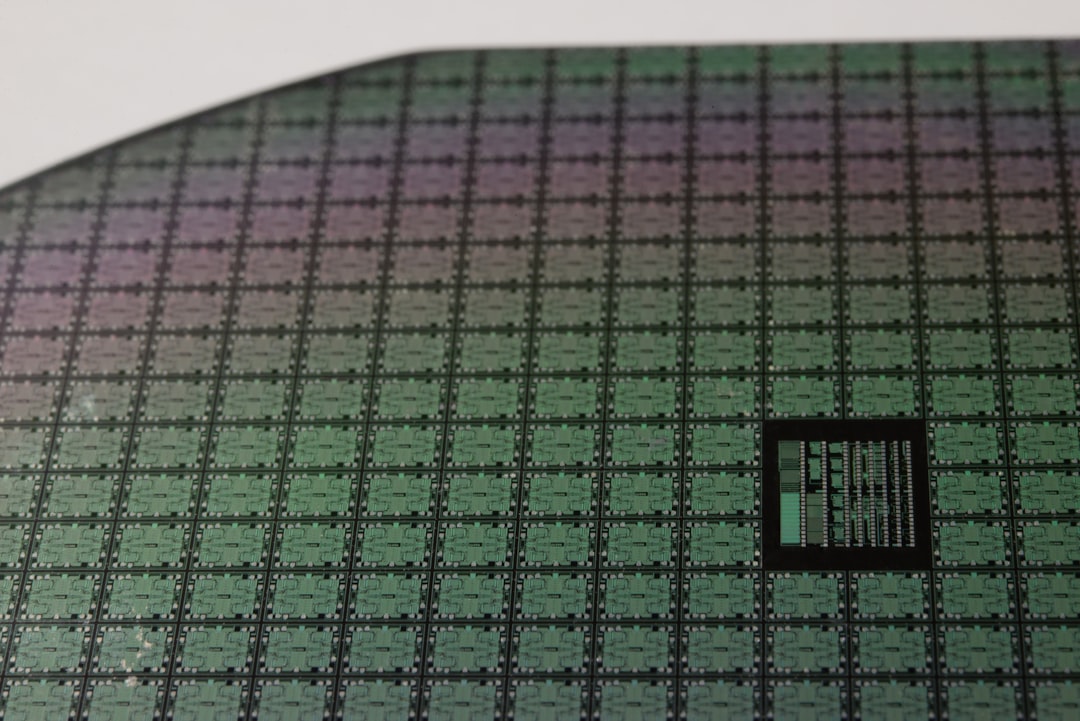

1. Quantization 3.0: Hyper-Efficient Model Compression

Quantization isn’t new in AI model optimization, but the methods expected for 2026 push it further. Quantization 3.0 refers to smarter, more granular compression that keeps accuracy losses small even in extremely lightweight formats. By reducing floating-point precision with techniques such as advanced bit-mapping and hybrid tensor slicing, developers report shrinking model size by up to 80% while retaining over 95% fidelity on NLP tasks.

Beyond making deployment practical in low-resource environments, this makes multi-model orchestration far more viable: when APIs and services run several specialized LLMs side by side, smaller models mean more of them fit on the same hardware at lower cost.
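To make the compression arithmetic concrete, here is a minimal Python sketch of group-wise symmetric (absmax) quantization, one common building block of low-bit schemes. It is not “Quantization 3.0” itself; the function names, the 4-bit width, the group size of 64, and the 16-bit scale are illustrative assumptions.

```python
from typing import List, Tuple


def quantize_groups(
    weights: List[float], bits: int = 4, group_size: int = 64
) -> Tuple[List[int], List[float]]:
    """Symmetric absmax quantization with one float scale per group of weights."""
    qmax = 2 ** (bits - 1) - 1  # largest signed code, e.g. 7 for 4-bit
    codes: List[int] = []
    scales: List[float] = []
    for start in range(0, len(weights), group_size):
        group = weights[start:start + group_size]
        absmax = max(abs(w) for w in group)
        # One scale per group: an outlier only costs precision inside its own group.
        scale = absmax / qmax if absmax > 0 else 1.0
        scales.append(scale)
        codes.extend(max(-qmax, min(qmax, round(w / scale))) for w in group)
    return codes, scales


def dequantize_groups(
    codes: List[int], scales: List[float], group_size: int = 64
) -> List[float]:
    """Map integer codes back to approximate floats using each group's scale."""
    return [c * scales[i // group_size] for i, c in enumerate(codes)]


def bits_per_weight(bits: int, group_size: int, scale_bits: int = 16) -> float:
    """Storage cost per weight, including the amortized per-group scale."""
    return bits + scale_bits / group_size


if __name__ == "__main__":
    w = [0.12, -0.5, 0.33, 0.9, -0.05, 0.27, -0.8, 0.41]
    codes, scales = quantize_groups(w, bits=4, group_size=4)
    approx = dequantize_groups(codes, scales, group_size=4)
    print("codes:", codes)
    print("max abs error:", max(abs(a - b) for a, b in zip(w, approx)))
    # 4-bit codes, 64-weight groups, 16-bit scales vs. an FP16 baseline.
    print("fraction of FP16 size:", bits_per_weight(4, 64) / 16)
```

Because each group carries its own scale, the rounding error for any weight is at most half its group's scale. The amortized scale is also why storage is slightly above the nominal width: 4-bit codes in groups of 64 with a 16-bit scale cost 4.25 bits per weight, roughly 27% of an FP16 baseline.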

2. Transparent Optimization: Building Explainable Pipelines

As AI comes under closer scrutiny, regulators such as the European Union (through its AI Act) and the US Federal Trade Commission are focusing heavily on transparency. In response, companies are developing explainable optimizers that reduce computational demands while also showing how and why decisions are made.

Leading LLM optimizers now feature integrated explainability modules that trace model outputs back to transformer weight behaviors, attention shifts, and vector-source alignment. These modules also tag portions of the inference pipeline for compliance auditing, making it easier to meet forthcoming AI governance standards.

Pluggable transparency kits are also appearing in schema and inference pipelines, letting operators switch dynamically between full disclosure and a lighter “enterprise-lite” mode depending on the user’s trust level.

3. Synthetic Fine-Tuning with Smart Data Generation

Relying solely on human-curated datasets does not scale. A dominant trend heading into 2026 is synthetic fine-tuning, in which LLMs are trained on data generated by other generative models. Using smaller, specialized models (termed “generative shadownets”), developers create custom tuning sets that broaden a model’s domain coverage while reducing exposure to sensitive real-world data.

Benefits include faster fine-tuning cycles, training data that is easier to keep compliant, and higher accuracy in niche knowledge domains. Synthetic fine-tuning also lets models stay current on emerging topics without major architectural changes.
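A common shape for a synthetic-data pipeline is generate, filter, deduplicate, then export for training. The Python sketch below shows that loop under stated assumptions: `template_teacher` is a hypothetical stand-in for a small generator model, and the length threshold and exact-match deduplication are deliberately simple placeholders for real quality checks.

```python
import json
import random
from typing import Callable, Dict, Iterable, List

Example = Dict[str, str]


def template_teacher(topic: str, rng: random.Random) -> Example:
    """Hypothetical stand-in for a small generator model (a "shadownet")."""
    task = rng.choice(["Explain", "Summarize", "List the main risks of"])
    return {
        "prompt": f"{task} {topic}.",
        "completion": f"[generator output about {topic} would go here]",
    }


def generate_synthetic_set(
    seeds: Iterable[str],
    teacher: Callable[[str, random.Random], Example],
    per_seed: int = 3,
    min_completion_chars: int = 20,
    rng_seed: int = 0,
) -> List[Example]:
    """Expand seed topics into prompt/completion pairs, then filter and deduplicate."""
    rng = random.Random(rng_seed)  # seeded so the tuning set is reproducible
    seen = set()
    dataset: List[Example] = []
    for topic in seeds:
        for _ in range(per_seed):
            ex = teacher(topic, rng)
            key = ex["prompt"].strip().lower()
            # Skip duplicate prompts and completions too short to be useful.
            if key in seen or len(ex["completion"]) < min_completion_chars:
                continue
            seen.add(key)
            dataset.append(ex)
    return dataset


if __name__ == "__main__":
    data = generate_synthetic_set(["GDPR data retention", "SOC 2 audits"], template_teacher)
    # JSON Lines is a common input format for fine-tuning jobs.
    print("\n".join(json.dumps(ex) for ex in data))
```

Seeding the random generator keeps each tuning set reproducible, which matters when a compliance review asks exactly what data a model was trained on.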

4. Edge-Aware Optimization for On-Device Inference

As inference moves closer to the user, especially in wearables and IoT devices, LLM deployment at the edge is gaining serious ground. Optimizers are now tailored to specific edge hardware, focusing on:

- Minimal memory footprint

- Fast cold-start times

- Batchless (single-request) inference paths

This enables mission-critical integrations (in healthcare or aviation, for example) and strengthens privacy, because data and inference stay on the device.
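“Minimal memory footprint” ultimately comes down to arithmetic: weights take parameters × bits ÷ 8 bytes, and a single-request key/value cache adds 2 × layers × KV heads × head dimension × context length × bytes per value. The Python sketch below checks a model against a device budget; the model shape, the 10% runtime overhead, and the 1 GiB budget are illustrative assumptions, not figures for any specific device.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelShape:
    params: int    # total parameter count
    layers: int    # number of transformer blocks
    kv_heads: int  # key/value heads per layer
    head_dim: int  # dimension of each head


def weight_bytes(shape: ModelShape, weight_bits: int) -> int:
    """Bytes needed to store all weights at the given bit width."""
    return shape.params * weight_bits // 8


def kv_cache_bytes(shape: ModelShape, context_tokens: int, cache_bits: int = 16) -> int:
    """KV-cache bytes for one request (batch size 1): keys plus values per layer."""
    return (2 * shape.layers * shape.kv_heads * shape.head_dim
            * context_tokens * cache_bits // 8)


def fits_on_device(shape: ModelShape, weight_bits: int, context_tokens: int,
                   budget_bytes: int, overhead: float = 0.10) -> bool:
    """True if weights + KV cache, plus an assumed runtime overhead, fit the budget."""
    total = weight_bytes(shape, weight_bits) + kv_cache_bytes(shape, context_tokens)
    return total * (1 + overhead) <= budget_bytes


if __name__ == "__main__":
    GiB = 1024 ** 3
    # Hypothetical 1B-parameter model shape, for illustration only.
    small = ModelShape(params=1_000_000_000, layers=16, kv_heads=8, head_dim=64)
    for bits in (16, 8, 4):
        print(bits, "bit weights fit in 1 GiB:",
              fits_on_device(small, bits, context_tokens=2048, budget_bytes=GiB))
```

For this hypothetical shape, 4-bit weights take 500,000,000 bytes and a 2,048-token FP16 cache takes 64 MiB, which fits in 1 GiB with the assumed overhead; the same model at 8-bit or FP16 weights does not.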

5. Closed-Loop Optimization Using Real-Time Feedback

Until recently, models were optimized during or after training. Heading into 2026, architectures increasingly rely on closed-loop feedback systems, in which optimizer decisions are recalibrated during real-time inference based on user input or environmental signals. This involves:

- Feedback-aware attention gate modulation

- Self-adjusting temperature and prompt depth

- Dynamic prompt-engine integration

Such optimizers do more than refine individual responses: they modulate system behavior across usage patterns, enabling adaptive learning at the inference boundary. This real-time adaptability is especially valuable for conversational agents, assistive AI, and self-optimizing enterprise models.
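One way to picture the “self-adjusting temperature” item is a small controller that nudges the sampling temperature after each piece of user feedback and clamps it to a safe range. The feedback labels (`too_random`, `too_repetitive`, `ok`), the step size, and the bounds below are hypothetical; a production system would likely use richer signals than a single label.

```python
from dataclasses import dataclass


@dataclass
class TemperatureController:
    """Hypothetical closed-loop controller for the sampling temperature."""

    temperature: float = 0.7
    step: float = 0.1
    min_temp: float = 0.1
    max_temp: float = 1.2

    def update(self, feedback: str) -> float:
        """Adjust temperature from one feedback label and return the new value."""
        if feedback == "too_random":
            self.temperature -= self.step  # make sampling more conservative
        elif feedback == "too_repetitive":
            self.temperature += self.step  # allow more varied sampling
        elif feedback != "ok":
            raise ValueError(f"unknown feedback label: {feedback!r}")
        # Clamp so a burst of feedback cannot push decoding into a degenerate range,
        # and round to avoid accumulating floating-point drift.
        self.temperature = round(min(self.max_temp, max(self.min_temp, self.temperature)), 3)
        return self.temperature


if __name__ == "__main__":
    ctl = TemperatureController()
    for label in ["too_random", "too_random", "ok", "too_repetitive"]:
        print(label, "->", ctl.update(label))
```

The value returned by `update` would then be passed as the temperature of the next generation request, closing the loop between user feedback and inference parameters.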

6. Modular Optimizers with API Mesh Integration

In multi-agent AI ecosystems, LLM optimizers must communicate effectively across frameworks and services. The latest trend is modular optimizers capable of API-level mesh orchestration. They support:

- Selective sharing of activation maps

- Language-specific token scaling

- On-demand plugin augmentation (sentiment, tone, structure)

This gives enterprise visibility layers finer control over the LLMs beneath them while enabling adaptive interactions between chatbots, document-understanding engines, and custom NLP workflows.
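On-demand plugin augmentation can be sketched as a registry of named post-processors, where each request names the plugins it wants. The registry, the `augment` function, and the toy word-list sentiment scorer below are hypothetical illustrations; a real mesh would route these calls to separate services or models, and this sketch does not cover activation-map sharing or token scaling.

```python
from typing import Any, Callable, Dict, Iterable

Plugin = Callable[[str], Any]
REGISTRY: Dict[str, Plugin] = {}


def register(name: str) -> Callable[[Plugin], Plugin]:
    """Decorator that makes a post-processing plugin available by name."""
    def deco(fn: Plugin) -> Plugin:
        REGISTRY[name] = fn
        return fn
    return deco


@register("sentiment")
def lexicon_sentiment(text: str) -> str:
    # Toy word-list scorer; a real mesh would call a dedicated sentiment service.
    positive = {"good", "great", "helpful", "resolved"}
    negative = {"bad", "broken", "slow", "error"}
    words = [w.strip(".,!?").lower() for w in text.split()]
    score = sum(w in positive for w in words) - sum(w in negative for w in words)
    return "positive" if score > 0 else "negative" if score < 0 else "neutral"


@register("structure")
def structure_stats(text: str) -> Dict[str, int]:
    """Count lines and markdown-style bullets in the output."""
    lines = text.splitlines()
    return {"lines": len(lines),
            "bullets": sum(line.lstrip().startswith("- ") for line in lines)}


def augment(llm_output: str, plugins: Iterable[str]) -> Dict[str, Any]:
    """Run only the requested plugins and attach their results to the LLM output."""
    requested = list(plugins)
    missing = [p for p in requested if p not in REGISTRY]
    if missing:
        raise KeyError(f"unregistered plugins: {missing}")
    return {"text": llm_output,
            "annotations": {p: REGISTRY[p](llm_output) for p in requested}}


if __name__ == "__main__":
    print(augment("The fix is great.\n- step one\n- step two", ["sentiment", "structure"]))
```

Keeping plugins behind a name lookup means a caller pays only for the augmentations it asks for, and new ones can be registered without touching the core pipeline.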

7. User Persona-Aware LLM Optimization

Understanding user personas is key to AI visibility success. In 2026, optimization frameworks embed logic that adjusts transformer layer activations based on inferred or explicitly declared user types. Customizations include:

- Domain-specific decoding strategies

- Security-level constraints (redaction, mask layers)

- Vocabulary modulation based on demographic indicators

This enables safer, more relevant, and more personalized LLM deployments without overhauling the base model architecture.
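The decoding-strategy and security-constraint items can be sketched as a per-persona profile that selects decoding parameters and output redaction rules. The persona names, parameter values, and regular expressions below are assumptions for illustration; note that this sketch operates on decoding settings and output text, not on the transformer activations the section describes.

```python
import re
from dataclasses import dataclass
from typing import Dict, Tuple, Union


@dataclass(frozen=True)
class PersonaProfile:
    temperature: float
    top_p: float
    max_tokens: int
    redact: Tuple[str, ...]  # names of redaction rules applied to outputs


# Hypothetical rules and personas, for illustration only.
REDACTION_RULES: Dict[str, re.Pattern] = {
    "email": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "account_number": re.compile(r"\b\d{8,}\b"),
}

PROFILES: Dict[str, PersonaProfile] = {
    "analyst": PersonaProfile(temperature=0.2, top_p=0.9, max_tokens=800, redact=()),
    "support_agent": PersonaProfile(temperature=0.5, top_p=0.95, max_tokens=400,
                                    redact=("account_number",)),
    "public": PersonaProfile(temperature=0.7, top_p=0.95, max_tokens=300,
                             redact=("email", "account_number")),
}


def _profile(persona: str) -> PersonaProfile:
    # Unknown or undeclared personas fall back to the most heavily redacted profile.
    return PROFILES.get(persona, PROFILES["public"])


def decoding_params(persona: str) -> Dict[str, Union[float, int]]:
    """Decoding settings to pass to the model for this persona."""
    p = _profile(persona)
    return {"temperature": p.temperature, "top_p": p.top_p, "max_tokens": p.max_tokens}


def redact_for(persona: str, text: str) -> str:
    """Apply the persona's redaction rules to model output before it is shown."""
    for rule in _profile(persona).redact:
        text = REDACTION_RULES[rule].sub(f"[{rule.upper()} REDACTED]", text)
    return text


if __name__ == "__main__":
    msg = "Contact jane.doe@example.com about account 123456789."
    for persona in ("analyst", "support_agent", "public"):
        print(persona, decoding_params(persona), redact_for(persona, msg))
```

Defaulting unknown users to the strictest redaction profile is a deliberate fail-closed choice: a missing persona should never widen what the model is allowed to reveal.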

The Road Ahead: Integrating Optimization into AI Visibility Stacks

By 2026, optimization is no longer a one-off pre-processing or training-time step. It becomes an ongoing, visibility-enhancing component that spans inference, compliance, UX, and security. LLM stacks that treat optimization as a living, modular ecosystem are likely to dominate AI visibility platforms.

From real-time feedback loops to API-fluent optimizers and synthetic fine-tuning strategies, these innovations are closing the gap between raw AI power and usable, trustworthy intelligence. As enterprises look to de-risk and elevate their AI stack, intelligent LLM optimization is the linchpin.

Frequently Asked Questions

- What is LLM optimization in the context of AI visibility?

  LLM optimization focuses on improving the efficiency, interpretability, and performance of large language models, particularly where transparency and real-time usability are critical, such as enterprise dashboards and analytics platforms.

- How does synthetic fine-tuning improve LLM accuracy?

  By generating tailored, high-quality training data with smaller generative models, synthetic fine-tuning allows rapid and safer improvement of LLM capabilities without relying on sensitive real-world datasets.

- Why is explainability important in LLM optimization for 2026?

  Rising regulatory standards and ethical concerns mean explainability is increasingly required or expected in many jurisdictions. Optimizers must not only improve speed but also make decisions traceable and auditable.

- How do closed-loop feedback systems work in LLMs?

  These systems collect user or environmental responses to LLM output and use them to adjust inference parameters such as temperature or token depth in real time, improving output relevance and alignment.

- Is edge deployment of LLMs viable with current optimizer technology?

  Increasingly, yes. Edge-aware optimizations such as quantization and cold-start reduction let LLMs run efficiently on lower-resource devices while keeping data on the device, with limited loss of essential capabilities as long as the model fits the device’s memory budget.