AI is rapidly changing how developers write code and explore ideas. One of the most popular AI-first tools for developers is Cursor AI, a code editor designed to work closely with modern AI models. But what if you want Cursor to use a model hosted on your own machine instead of a cloud-based Large Language Model (LLM) such as OpenAI's GPT-4? Good news: it's possible, and the local tooling keeps getting easier to use.

TL;DR

Cursor AI lets developers use local AI models as coding assistants inside the editor. This guide explains how to configure Cursor to use local models such as LLaMA, Mistral, or Code LLaMA, served by tools like Ollama or LM Studio. Running models locally gives you more privacy and control and can lower your costs, though performance depends on your hardware and the model you choose. It's a good fit for developers who want to self-host and customize their coding environment.

Why Use Local Models With Cursor?

Cloud-based models are reliable and highly capable, but they are not ideal for every use case. Here are a few reasons to consider using local models with Cursor AI:

- Privacy: The model runs on your own hardware, so your code and prompts are not sent to a third-party model provider.

- Cost: Hosting locally can help avoid pricey subscriptions or pay-per-token charges.

- Customization: You can select or even fine-tune models that best fit your workflow.

- Offline Access: Develop without an internet connection and still enjoy AI assistance.

Overview of Tools You’ll Need

Before we walk through the setup, let’s cover what tools you’ll need to have in place:

- Cursor AI: The code editor you'll be working in, available from the official Cursor website.

- Local Model Backend: A platform to run models. Popular options include:

  - Ollama: A simple, command-line-based model runner.

  - LM Studio: A GUI-based local model interface that supports OpenAI-compatible endpoints.

- Local Model Files: Quantized versions of LLaMA, Mistral, or other Transformer-based models, which can be downloaded or imported directly in Ollama or LM Studio.

Step-by-Step Guide to Setting Up a Local Model With Cursor

1. Install Cursor

Download and install Cursor from the official website. Once installed, open the app and sign in to complete your initial setup.

2. Install Ollama or LM Studio

Choose your local model provider:

- Ollama: Download it from ollama.com and install it on your system. It provides CLI commands such as `ollama run` to start models quickly.

- LM Studio: If you prefer a UI, download LM Studio from lmstudio.ai. Once launched, it offers model downloads, chat windows, and, most importantly, a built-in OpenAI-compatible API server.

3. Download and Run a Model

Once you’ve installed your runner, it’s time to download a model:

- Ollama: Run a command such as `ollama run mistral` (downloads the model if needed and starts an interactive session) or `ollama pull codellama` (downloads the model only). Ollama serves models from a local server, by default at `http://localhost:11434`.

- LM Studio: Download a model through the GUI and start the API server, which listens on port `1234` by default. Make sure the OpenAI-compatible API server is running.

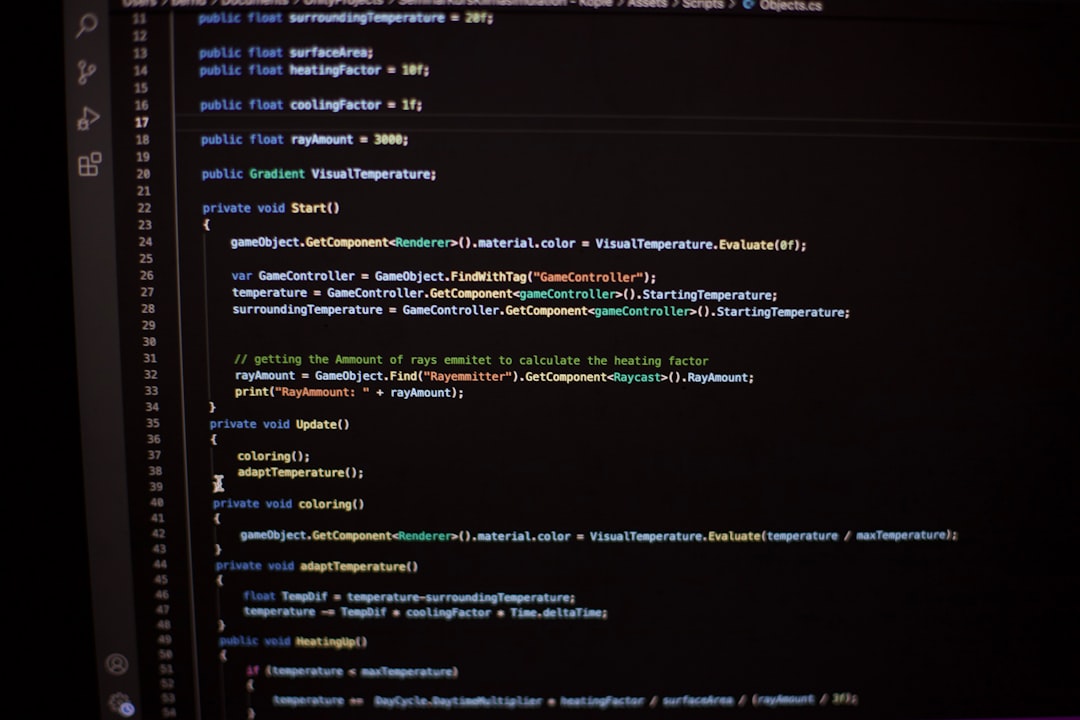
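Before connecting Cursor, it can help to confirm that the server actually answers. LM Studio and Ollama both expose an OpenAI-compatible `GET /v1/models` route. The sketch below assumes LM Studio's default base URL `http://localhost:1234/v1` (swap in `http://localhost:11434/v1` for Ollama) and uses only the Python standard library; the function names are illustrative, not part of either tool.

```python
import json
import urllib.request

# Assumed default: LM Studio on port 1234. For Ollama use "http://localhost:11434/v1".
DEFAULT_BASE_URL = "http://localhost:1234/v1"


def parse_model_ids(payload: dict) -> list[str]:
    """Extract model IDs from an OpenAI-style /v1/models response body."""
    return [entry["id"] for entry in payload.get("data", [])]


def list_local_models(base_url: str = DEFAULT_BASE_URL, timeout: float = 5.0) -> list[str]:
    """Query the local server's /models route and return the model IDs it reports."""
    url = base_url.rstrip("/") + "/models"
    with urllib.request.urlopen(url, timeout=timeout) as response:
        payload = json.load(response)
    return parse_model_ids(payload)


if __name__ == "__main__":
    try:
        print("Models served locally:", list_local_models())
    except OSError as err:  # URLError is an OSError; raised when nothing is listening
        print("Local server not reachable:", err)
```

If this prints a list of model IDs, the backend is ready; a connection error usually means the server was not started or is on a different port.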

4. Configure Cursor to Use the Local Endpoint

Now that your model is running, it’s time to connect it to Cursor AI:

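- Open Cursor's command palette (`Cmd + Shift + P` on macOS or `Ctrl + Shift + P` on Windows/Linux).

- Search for "Cursor: Edit LLM Configuration." Command names and settings locations vary between Cursor versions; recent builds instead expose an OpenAI API key field and an "Override OpenAI Base URL" option under Settings > Models.

- In the configuration that opens, point the provider at your local server. The exact format depends on your Cursor version, so treat this as an illustrative example:

```json
{
  "defaultProvider": "openai",
  "providers": {
    "openai": {
      "apiKey": "local",
      "baseURL": "http://localhost:1234/v1"
    }
  }
}
```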

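This reroutes Cursor's OpenAI-style requests to the local server provided by LM Studio. If you use Ollama instead, set `baseURL` to its OpenAI-compatible endpoint, `http://localhost:11434/v1`. Some Cursor versions send model requests from Cursor's own servers rather than directly from your machine; if a `localhost` URL doesn't work, a common workaround is to expose the local server through a tunnel (for example, ngrok) and use the tunnel's public URL.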
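To check that the endpoint responds the way an OpenAI-compatible client expects, you can send one chat completion yourself with the same base URL and placeholder key. This is a standard-library sketch of an OpenAI-style `POST /chat/completions` request, not Cursor's internal code. The model name `mistral` is an assumption: it must match an ID your server reports (Ollama uses tags like `mistral`, while LM Studio IDs usually look different).

```python
import json
import urllib.request

BASE_URL = "http://localhost:1234/v1"  # Same value as "baseURL" in Cursor's configuration
API_KEY = "local"  # Local servers generally ignore the key, but clients still send one


def build_chat_request(prompt: str, model: str, base_url: str = BASE_URL,
                       temperature: float = 0.2) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request without sending it."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,  # lower values give more deterministic output
    }
    return urllib.request.Request(
        base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {API_KEY}",
        },
        method="POST",
    )


def ask(prompt: str, model: str = "mistral") -> str:
    """Send the request and return the assistant's reply text."""
    request = build_chat_request(prompt, model)
    with urllib.request.urlopen(request, timeout=120) as response:
        payload = json.load(response)
    return payload["choices"][0]["message"]["content"]


if __name__ == "__main__":
    try:
        print(ask("Write a Python function that reverses a string."))
    except OSError as err:  # e.g. connection refused when no local server is running
        print("Request failed:", err)
```

If this script gets a sensible answer but Cursor does not, the problem is most likely in Cursor's settings or network path rather than in the model server.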

5. Test and Start Coding

Save the configuration, reload the Cursor window, and start using your local assistant. Try asking for code completions or debugging help just as you would with GPT-4. Response times depend on your hardware and the model you selected, but many developers find smaller quantized models responsive enough for everyday use on modern machines. Note that some features, such as Cursor's Tab autocomplete, may keep using Cursor's own models.
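To turn "response times vary" into a number you can compare across models, divide the generated tokens by the wall-clock time. OpenAI-compatible servers usually report token counts in a `usage` object; the sketch below assumes that field is present, and both helper names are hypothetical.

```python
import time
from typing import Callable


def tokens_per_second(response_payload: dict, elapsed_seconds: float) -> float:
    """Compute generation throughput from an OpenAI-style response's usage field."""
    completion_tokens = response_payload["usage"]["completion_tokens"]
    if elapsed_seconds <= 0:
        raise ValueError("elapsed_seconds must be positive")
    return completion_tokens / elapsed_seconds


def timed_call(send: Callable[[], dict]) -> tuple[dict, float]:
    """Run a request-sending function and return its payload plus elapsed seconds."""
    start = time.perf_counter()
    payload = send()
    return payload, time.perf_counter() - start
```

For example, a response reporting 240 completion tokens after 8 seconds works out to 30 tokens per second. Because the elapsed time also includes prompt processing, treat the result as a lower bound on raw generation speed.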

Recommended Local Models for Coding

The quality of your AI experience depends heavily on the model. Here are some models that work well for code generation and understanding:

- Code LLaMA: A code-optimized version of Meta’s LLaMA model. Great for work in Python, JavaScript, and more.

- Mistral-7B Instruct: A versatile general-purpose model with solid instruction-following capabilities.

- Phi-2: A lightweight model (2.7B parameters) that runs on smaller machines while still producing fast, usable code completions.

- StableCode: A code-focused model from Stability AI, designed specifically for developer tasks such as code completion.

Tips for Optimizing Local Model Performance

If responses feel slow or your machine struggles, try these tips:

- Use a Quantized Model: 4-bit and 8-bit models need far less memory than full 16-bit weights and usually run faster, at a modest cost in output quality.

- Close Other Intensive Applications: Freeing up system RAM/CPU can improve model performance.

- Adjust Sampling Parameters: Temperature and top_p shape output quality, while max tokens caps response length and therefore generation time. Lower temperature makes results more deterministic.

- Test Several Models: Each model shines in different tasks—don’t rely solely on benchmarks.
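The quantization tip comes down to simple arithmetic: weight memory is roughly the parameter count times bits per weight, divided by 8 to get bytes. The sketch below is a back-of-the-envelope estimate that ignores the KV cache, activations, and format overhead, so treat its numbers as a lower bound.

```python
def weight_memory_gb(num_parameters: float, bits_per_weight: int) -> float:
    """Approximate memory for model weights alone, in gigabytes (10**9 bytes)."""
    bytes_total = num_parameters * bits_per_weight / 8
    return bytes_total / 1e9


if __name__ == "__main__":
    # Compare a 7B-parameter model at common precisions.
    for bits in (16, 8, 4):
        print(f"7B model at {bits}-bit: ~{weight_memory_gb(7e9, bits):.1f} GB")
```

For a 7B model this gives about 14 GB at 16-bit, 7 GB at 8-bit, and 3.5 GB at 4-bit. Real 4-bit formats store extra scaling data, so actual files are somewhat larger, but the ratio explains why quantized models fit on ordinary laptops.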

When You Might Still Prefer Cloud Models

Despite the advantages of local models, cloud-based LLMs still have strong use cases:

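- Large Context Windows: Cloud LLMs routinely handle 128k tokens or more. Some local models advertise similar limits, but long contexts need a lot of memory and slow down noticeably on consumer hardware.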

- Cutting-edge Instruction Following: GPT-4 and Claude tend to outperform in nuanced conversations.

- Resource Constraints: If your computer struggles with running local models, it may not be worth the hassle.

Many developers ultimately choose a hybrid approach, using local models for routine or private work and switching to cloud LLMs for complex tasks.

Conclusion

Using local models with Cursor AI is a powerful and accessible way to take control of your development workflow. Whether you're motivated by privacy, cost, or the fun of experimentation, local LLMs are more capable than ever, and integrating them into Cursor can often be done in under an hour. With a few tools and some tweaks to your configuration, you can get AI coding assistance on your own terms.

So give it a try, find the setup that suits you, and enjoy the flexibility of building AI into your development environment, locally and securely.